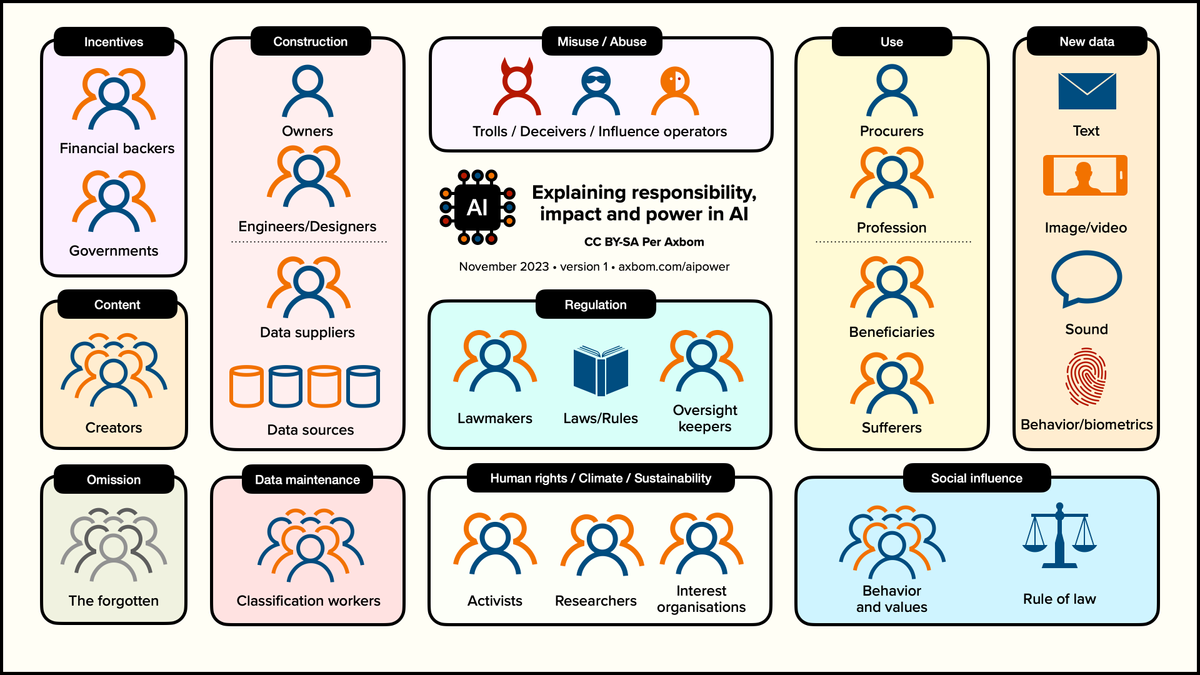

Explaining responsibility, impact and power in AI

I made this diagram as an explanatory model of the relationships between different actors in AI development and use. It gives you something to point at when showing, for example, who finances the systems, who contributes to them and who benefits or suffers from them. Below are explanations of each grouping in the chart.

Download the diagram in PDF format:

Diagram content

Incentives

Two stakeholders inhabit the incentives grouping:

- Financial backers hope to profit from investing in AI. They spend heavily on presenting AI as a benevolent, beneficial and necessary technology, and on presenting themselves as its best protectors.

- Governments have a potentially more complex spectrum of motivations: they are also interested in growing the economy, but they likewise wish to protect the wellbeing of the citizens they serve. Governments will therefore pursue regulation that encourages the growth of AI and protects domestic companies and resources, while ensuring that harm to the population is minimised.

Construction

- Owners oversee the development of models and tools. Much like financial backers, they position themselves as having superior knowledge of how the technology should be built and deployed. They also have a vested interest in keeping the number of actors who can build and maintain the new technology as small as possible.

- Engineers and designers carry out the work required to bring the tools and models to market. They work under constraints of time, money and access to data, compute power and manual training. They also make decisions about how tools appear to users, for example whether the output seems more or less human.

- Data suppliers can be internal or external to a given company, but either way they play an important role in deciding what content is made available for developing the computational models behind the consumer-facing tools.

- Data sources can include a wide range of research databases, online material, books, PDFs and data collected as people use the tools.

Content

- Creators of content aren't always aware that their content is being used by AI companies. When they are, they aren't always happy about it.

Omission

- The Forgotten refers to the massive corpus of human content (art, literature, physical designs, knowledge, wisdom and more) that never makes it into training because of language, bias or lack of digital representation.

Data maintenance

- Classification workers are often low-paid and spread across the world. They label, tag and evaluate content, and help make sure the systems produce as little controversial content as possible. They are part of a workforce that makes systems appear more autonomous than they really are.

Misuse/Abuse

- Trolls make use of AI to enable mischief and cause suffering.

- Deceivers often use AI to scam people out of money. They can also, for example, generate content and monetise it through ad networks.

- Influence operators may act on the instructions of state actors, warmongers, tyrants or criminals to create conflict based on false information. AI can accelerate this kind of influence, both in producing lies and in spreading them.

Regulation

- Lawmakers work to oversee what is happening in technology, understand the risks and benefits, and regulate accordingly. They are often closely courted by financial backers and owners.

- Laws and regulations: in some cases existing laws can be, and are, applied (copyright, for example). At other times no relevant laws are in place; producing deepfakes in the likeness of others, for example, is not illegal in many countries.

- Oversight keepers include both those responsible for upholding law and order and those helping organisations (for example corporate lawyers) understand what they can and cannot do with new tools. Sometimes the oversight keepers themselves deploy AI tools, for example when law enforcement uses facial recognition.

Human Rights / Climate / Sustainability

- Activists work to raise awareness and influence public opinion about how new technology enables human rights abuses, and how vulnerable populations suffer even as advantaged people benefit further.

- Researchers study how the tools affect politics, social contracts, the economy and much more. They provide important insights into which key elements to monitor when gauging whether the net impact of the technology is positive, and where to focus regulation efforts.

- Interest organisations (often charities, human rights organisations and environmental communities) keep a close eye on what is happening within their respective spaces and publish reports on what they observe and the effects they measure.

Use

- Procurers are responsible for purchasing tools and making them available within organisations. They play an important role in evaluating tools and ensuring they are implemented safely.

- Profession represents everyone using AI in their everyday work to boost their own performance, create new things for others or invent new ways of working.

- Beneficiaries includes anyone who can clearly articulate how they benefit from AI tools, as well as those who benefit when others use AI, for example to evaluate them.

- Sufferers includes anyone who feels worse off as a result of AI tools, as well as those who suffer unknowingly when someone else deploys AI, for example to evaluate them in job, social or performance contexts.

People can be both beneficiaries and sufferers if they use or are exposed to many different tools.

New data

The purpose of this section is to emphasise that everyday work with digital tools, and continued exposure to tools that consume content, also means giving up content that furthers the development of AI tools. The following are examples of types of content.

- Text is used to train AI tools and can be captured from documents, tools, forums, messages, blog posts and more.

- Image/video can be captured from online meetings, when apps are given access to photo libraries, or through specific apps for recording or photos (such as apps for filters, face swap or aging effects).

- Sound is captured from meetings, calls, videos and digital assistants as you or someone else is using them.

- Behavior/biometrics capture is increasingly able to extract unique, individual identification data from typing patterns, voiceprints, gait, heartbeat and more. Behavior also refers to data captured to infer location, shopping preferences, political convictions, sexual activity and more.

Social influence

Finally, it's important to consider how technology can influence the way people interact with each other, in both small and large communities.

- Behavior and values can change when expectations of work change, or when trust is eroded by growing amounts of misinformation or impersonal outputs. Recent history offers an example: interpersonal communication changed rapidly with the advent of smartphones.

- Rule of Law refers to everyone being equal under the law, in accordance with democratic values. It is important that individuals keep the power to challenge decisions made by AI/algorithms, which requires transparency and due process. There is reason to believe that black-box algorithms making decisions that affect people's lives (in the public sector and elsewhere) can make it harder to provide the transparency that everyone is entitled to, and therefore also harder to apply corrections when people are mistreated.

Updates

I will update the diagram when important issues arise that I feel should be communicated here. I am also open to feedback and suggestions. The latest version is always on this page, and the URL is visible in the diagram.

Image description

A chart made up of different sections containing stakeholders or subject areas.

The title is "Explaining responsibility, impact and power in AI".

On the left side are:

- Incentives: Financial backers, Governments

- Construction: Owners, Engineers/Designers, Data suppliers, Data sources

- Content: Creators

- Omission: The Forgotten

- Data maintenance: Classification workers

In the center are:

- Misuse/Abuse: Trolls, Deceivers, Influence operators

- Regulation: Lawmakers, Laws/Rules, Oversight keepers

- Human Rights/Climate/Sustainability: Activists, Researchers, Interest organisations

On the right side are:

- Use: Procurers, Profession, Beneficiaries, Sufferers

- New Data: Text, Image/Video, Sound, Behavior/biometrics

- Social influence: Behavior and values, Rule of Law

Comments

November 8, 2023 – Ton Zijlstra:

Good stuff Per, thank you. I was thinking about the stakeholders you mention versus the ones listed in the EU AI Regulation as having specific responsibilities (for an AI application to be admissible on the EU market).

The EU AI Act looks at manufacturers (your owners) and at the procurers you mention, but also at distributors. And not just at the professionals using AI tools to generate output for their tasks, but also at the people using the outputs of AI tools run by others.

In the EU AI Act this is to prevent an otherwise irresponsible or illegal tool from still gaining access to the EU market by doing all the work outside the EU and only selling the results into the EU. There may be a responsibility worth including here: wanting to know the provenance of certain generated output, akin to wanting to know where the clothes you wear were manufactured and under which conditions.

November 9, 2023 – Per (author):

Good points Ton! Thank you. I'll try and get through the AI Act and think about how some of those aspects could perhaps be made more clear in the diagram.
